Source Components #

Source Components are the first Nodes in a valid Workflow. They allow you to Extract Data from files, databases, Fusion Registry 10 or any other data source by using the Script Source.

Before you can add valid Source Components to the

Workflow, a user with the predef_source_management

Permission should

Create a Predefined Source.

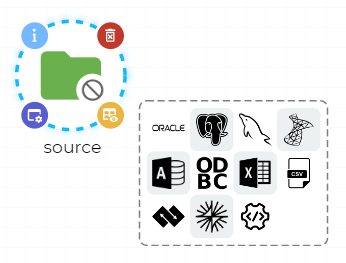

When you first drop a Source Component onto the Workspace, a small rectangle will appear with icons representing various available data sources:

- an Oracle Database table or SQL

query,

- an Oracle Database table or SQL

query, - a PostgreSQL Database table

or SQL query,

- a PostgreSQL Database table

or SQL query, - a MySQL Database table

or SQL query,

- a MySQL Database table

or SQL query, - a Microsoft SQL Server

Database table or SQL query,

- a Microsoft SQL Server

Database table or SQL query, - a Microsoft Access file,

- a Microsoft Access file, - an ODBC Database table or SQL query,

- an ODBC Database table or SQL query, - a single Sheet from an Excel file,

- a single Sheet from an Excel file, - a CSV file,

- a CSV file, - an SDMX-ML file,

- an SDMX-ML file, - Data from a Fusion Registry 10 Dataflow,

- Data from a Fusion Registry 10 Dataflow, - a custom script that collects data and converts

it into a Python Pandas DataFrame.

- a custom script that collects data and converts

it into a Python Pandas DataFrame.

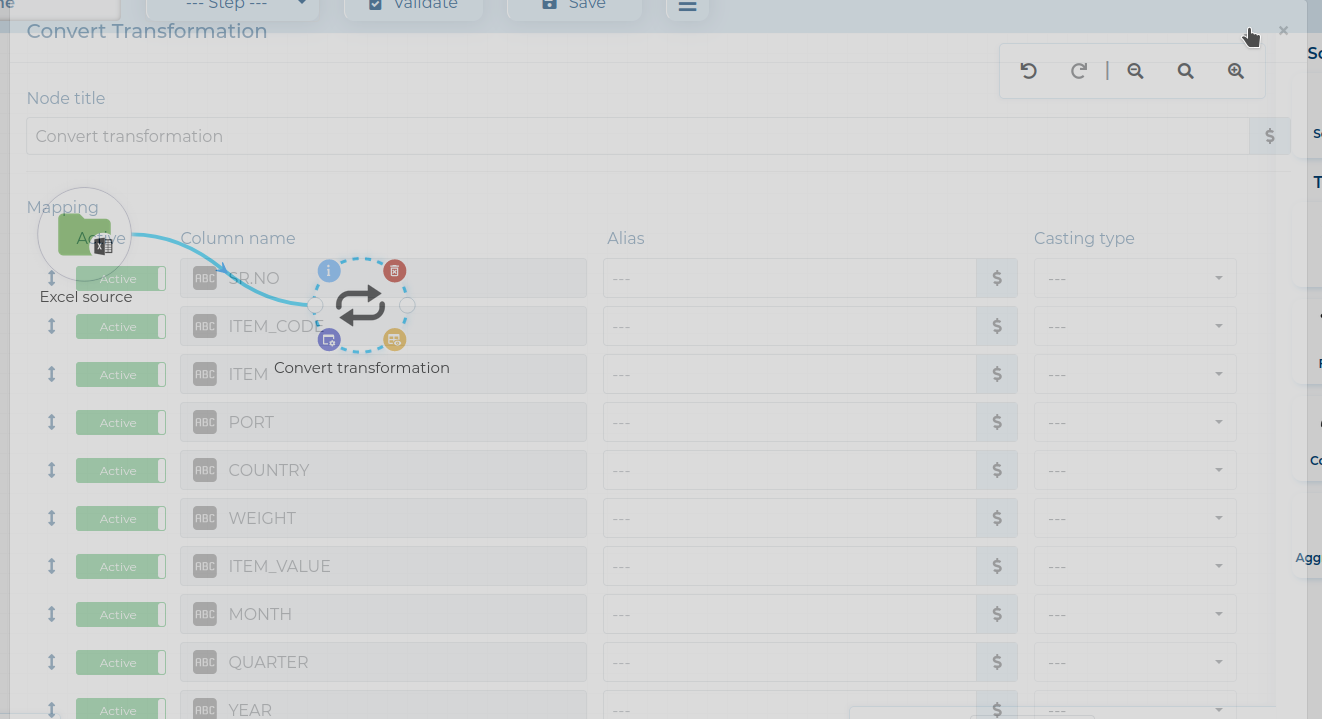

When you click any of the icons, it will open a new popup for configuring that specific data source.

Once you select a specific data source from the list, that specific Source Component will only Extract data from that chosen data source. To choose a new data source, you need to remove that Source Component and drop a new one onto the Workspace.

If you do not select any data source, the Node will disappear once you click anywhere on the workspace.

When you click the Minimalize button in the top right corner of the window (circled red), the window will become transparent, allowing you to see the Workspace.

Clicking the Save button will first validate the configuration. If any errors occur, an error message will appear under the field that was not configured correctly. If the configuration is valid, the window will close, and you can return to modifying your Modifier.

If you can modify any data in the configuration windows with Process Parameters, a smallicon will be displayed on the left of the field that can be configured by it.

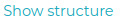

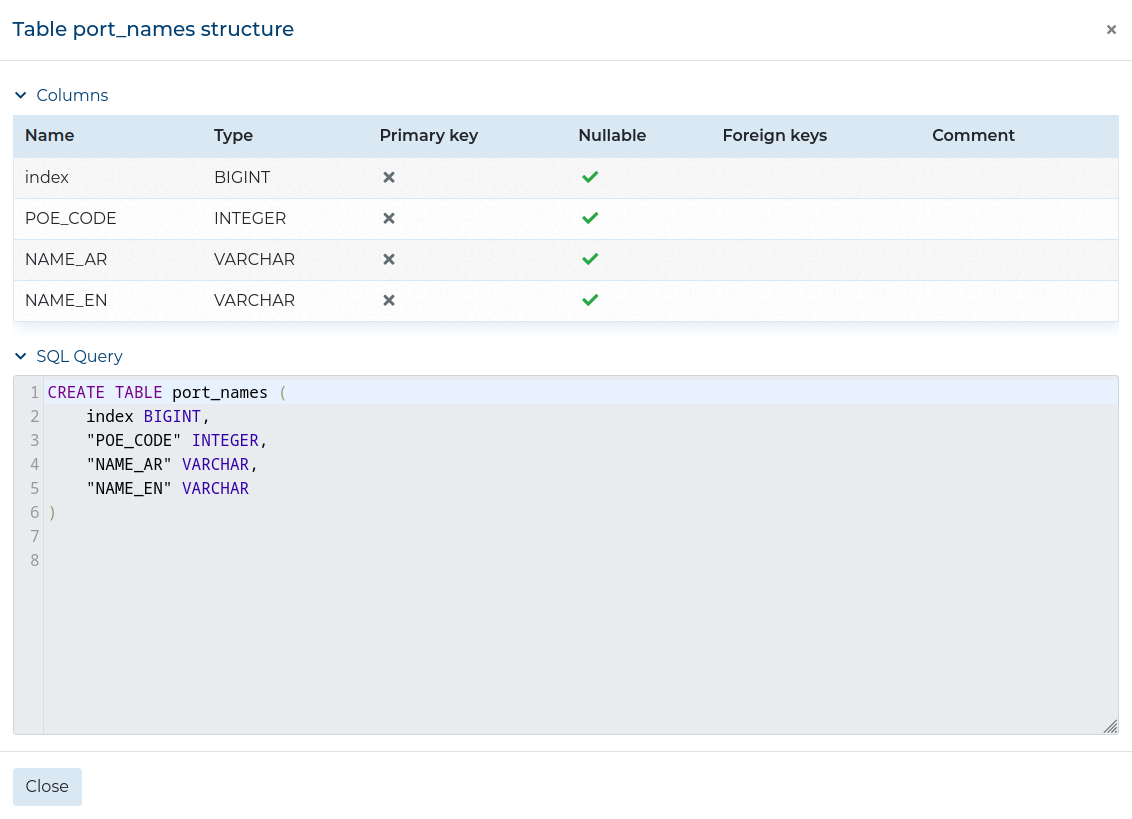

Database sources and the Fusion Registry 10 data source allow you to view the table structure by clicking the Show structure button.

This opens a window showing the structure of the data source.

Examples

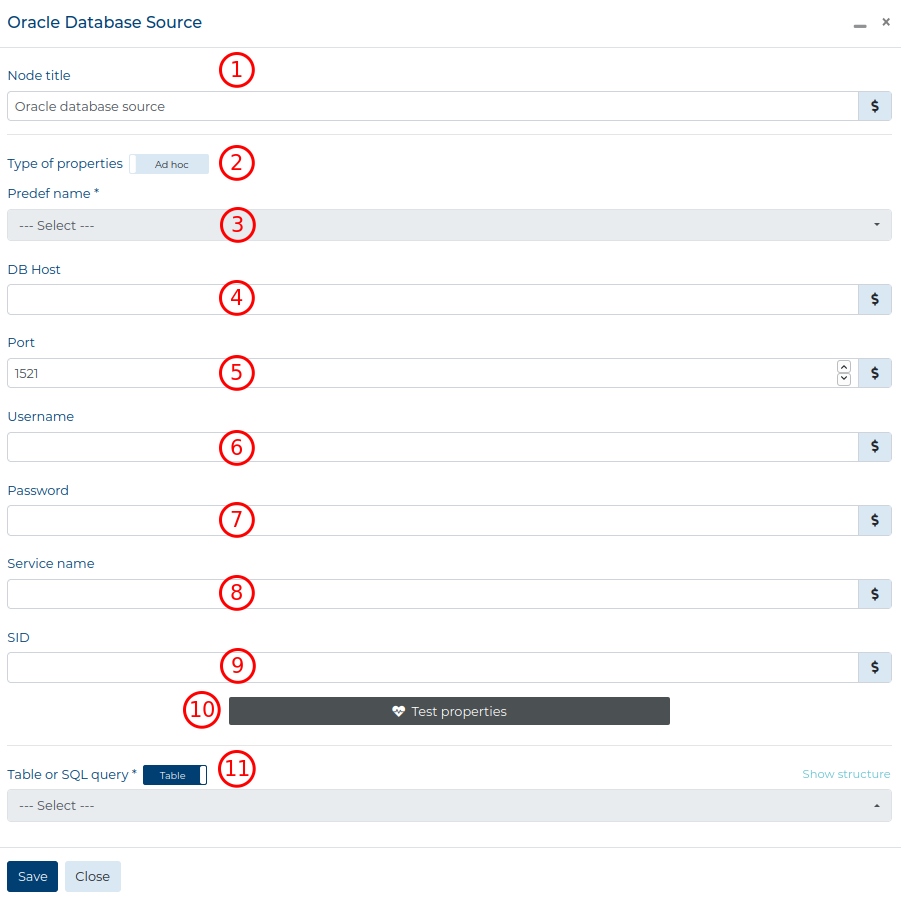

Oracle Data Source #

In the configuration window for the Oracle data source, you need to fill out the following fields:

- Node title - the title of this Node that will be displayed on the Workspace and in the Data Preview window (Optional),

- Type of properties - select whether to use one of the Predefined Sources or create an ad hoc source connection,

- Predef name - the name of the Predefined Source; after

selecting one, all the grey fields will be filled out automatically (Required when

Predefinedis selected), - DB Host - the address where the database is located (Required when

Ad hocis selected), - Port - the port of the address (Required when

Ad hocis selected), - Username - the username with access to the database (Required when

Ad hocis selected), - Password - the password needed to access the database (Required when

Ad hocis selected), - Service name - the alias of the database; (use either Service name

or SID) (Required when

Ad hocis selected), - SID - the unique name of the database; (use either Service name

or SID) (Required when

Ad hocis selected), - Test properties / Edit properties (only visible when

Ad hocis selected) - button to check if the input fields create a valid connection. If the connection is valid, changes to Edit properties which allows changing the above fields, - Table or SQL query - either choose the name of the database table (toggled to

Table) or provide an SQL query to extract data (toggled toSQL).

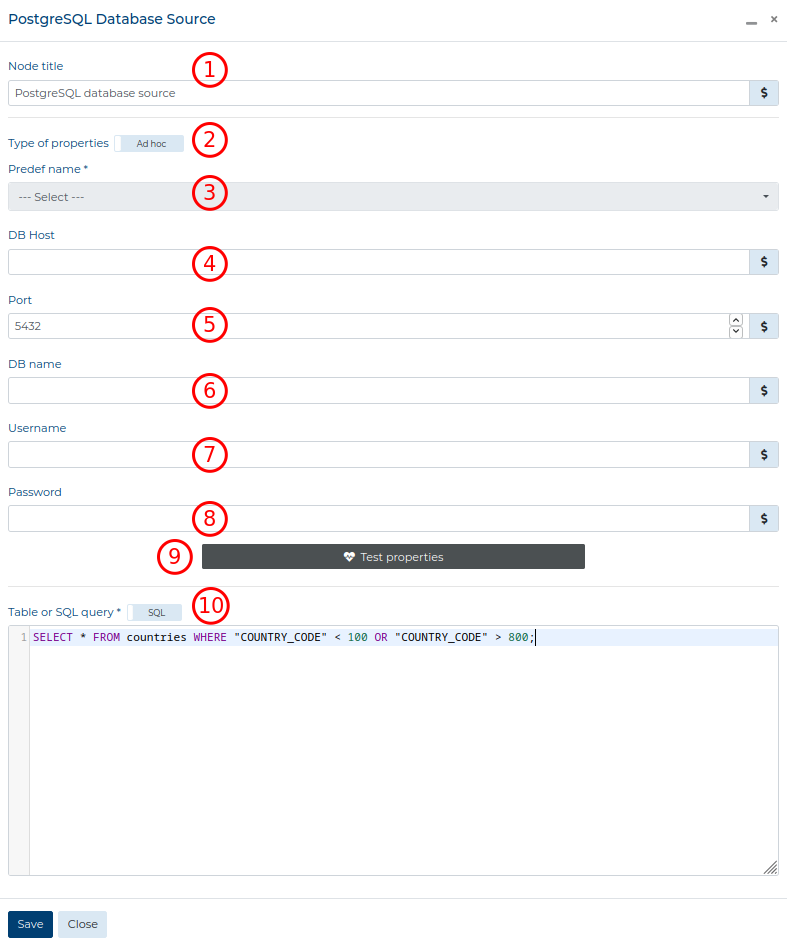

PostgreSQL Data Source #

In the configuration window for the PostgreSQL data source, you need to fill out the following fields:

- Node title - the title of this Node that will be displayed on the Workspace and in the Data Preview window (Optional),

- Type of properties - select whether to use one of the Predefined Sources or create an ad hoc source connection,

- Predef name - the name of the Predefined Source; after

selecting one, all the grey fields will be filled out automatically (Required when

Predefinedis selected), - DB Host - the address where the database is located (Required when

Ad hocis selected), - Port - the port of the address (Required when

Ad hocis selected), - DB name - the database that we want to access (Required when

Ad hocis selected), - Username - the username with access to the database (Required when

Ad hocis selected), - Password - the password needed to access the database (Required when

Ad hocis selected), - Test properties / Edit properties (only visible when

Ad hocis selected) - button to check if the input fields create a valid connection. If the connection is valid, changes to Edit properties which allows changing the above fields, - Table or SQL query - either choose the name of the database table (toggled to

Table) or provide an SQL query to extract data (toggled toSQL).

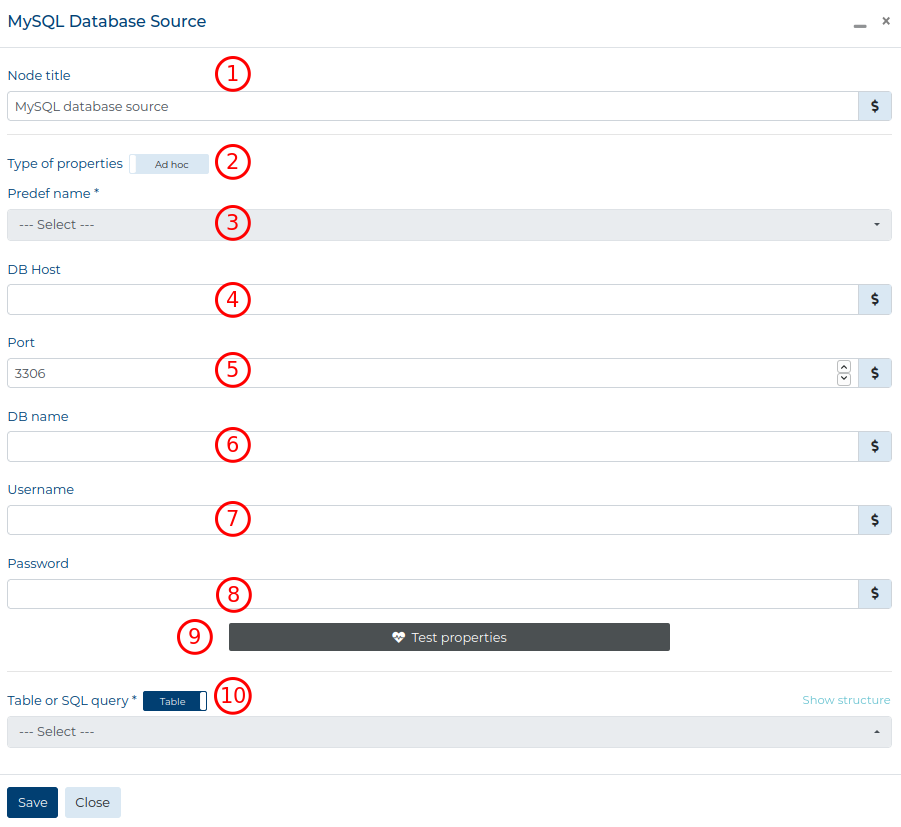

MySQL Data Source #

The configuration of the MySQL data source is similar to the PostgreSQL Data Source.

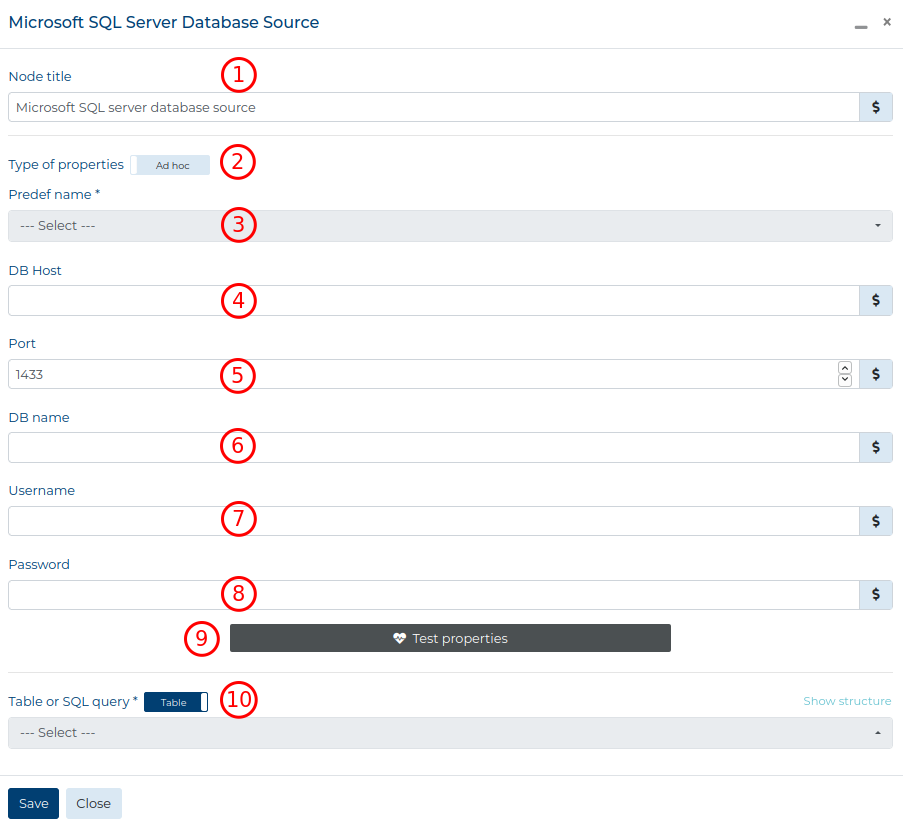

Microsoft SQL Server Data Source #

The configuration of the Microsoft SQL Server data source is similar to the PostgreSQL Data Source.

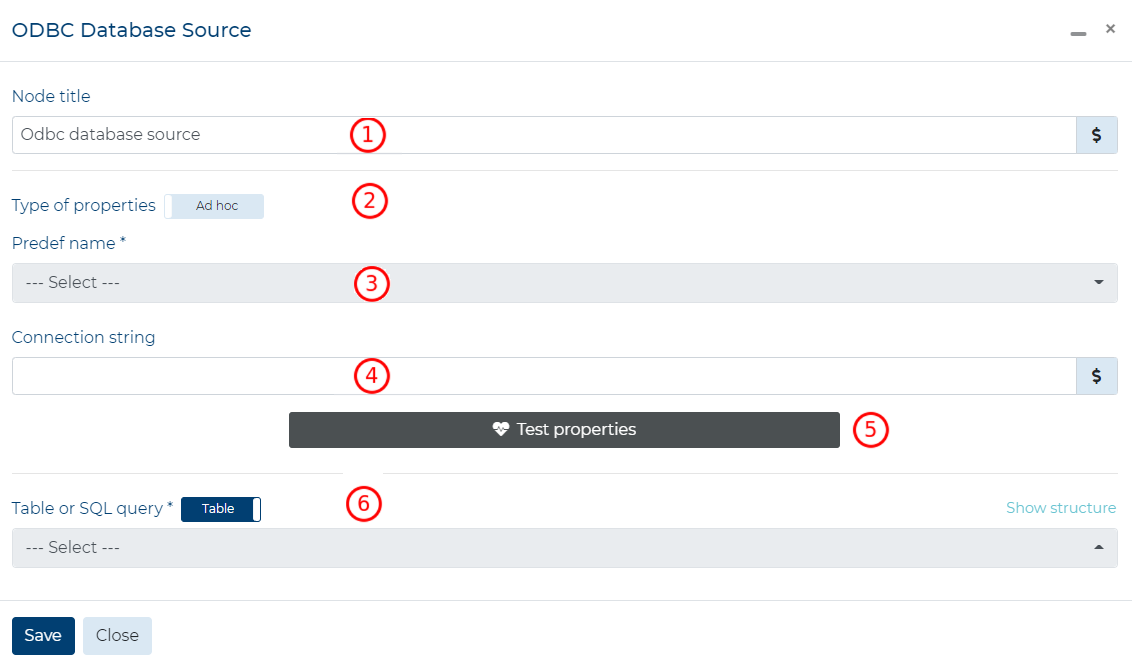

ODBC Data Source #

In the configuration window for the ODBC data source, you need to fill out the following fields:

- Node title - the title of this Node that will be displayed on the Workspace and in the Data Preview window (Optional),

- Type of properties - select whether to use one of the Predefined Sources or create an ad hoc source connection,

- Predef name - the name of the Predefined Source; after

selecting one, all the grey fields will be filled out automatically (Required when

Predefinedis selected), - Connection string - the string needed to make a connection to the database,

- Test properties / Edit properties (only visible when

Ad hocis selected) - button to check if the input fields point to a valid file. If the file is valid, changes to Edit properties which allows changing the above fields, - Table or SQL query - either choose the name of the database table (toggled to

Table) or provide an SQL query to extract data (toggled toSQL).

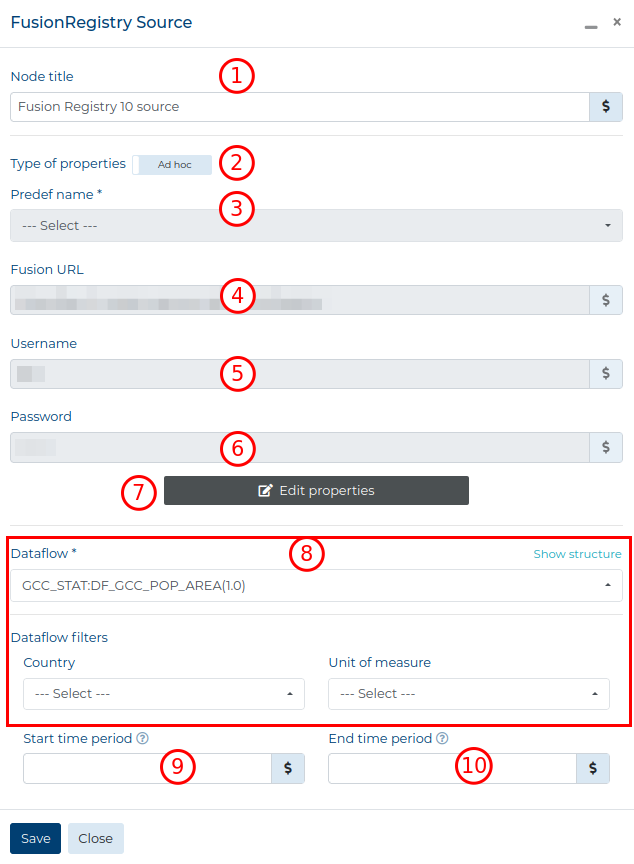

Fusion Registry 10 Data Source #

In the configuration window for the Fusion Registry 10 data source, you need to fill out the following fields:

- Node title - the title of this Node that will be displayed on the Workspace and in the Data Preview window (Optional),

- Type of properties - select whether to use one of the Predefined Sources or create an ad hoc source connection,

- Predef name - the name of the Predefined Source; after

selecting one, all the predefined fields will be filled out automatically (Required when

Predefinedis selected), - Fusion URL - the URL address of the Fusion Registry instance (Required when

Ad hocis selected), - Username - the username with access to Fusion Registry dataflows (Required when

Ad hocis selected), - Password - the password needed to access Fusion Registry dataflows (Required when

Ad hocis selected), - Test properties / Edit properties (only visible when

Ad hocis selected) - button to check if the input fields create a valid connection. If the connection is valid, changes to Edit properties which allows changing the above fields, - Dataflow - select the Dataflow from which you want to extract data,

- Dataflow filters - choose how to filter out the extracted data; this is Optional, but if you intend to filter out the data, filling out these fields might greatly improve the speed of Extracting data from this data source; the number of filters depends on the dimensions in the Dataflow,

- Start time period - provide the time period from which to extract data; this depends

on the type of the time period used in collecting data in the chosen Dataflow; hover on the

icon to see example valid values; this field is Optional,

- End time period - provide the time period to which to extract data; this depends

on the type of the time period used in collecting data in the chosen Dataflow; hover on the

icon to see example valid values; this field is Optional.

Not filling the Start time period and End time period fields will Extract data from the earliest to the latest time period. Filling out only one of the fields will filter data from or to that time period respectively. Providing both will only extract data that matches the range between the Start time period and End time period.

All column types are set to string when extracting data using the Fusion Registry 10 Source.

Script Data Source #

The code in the Predefined Script Source can only be changed by users with the

predef_source_management

Permission.

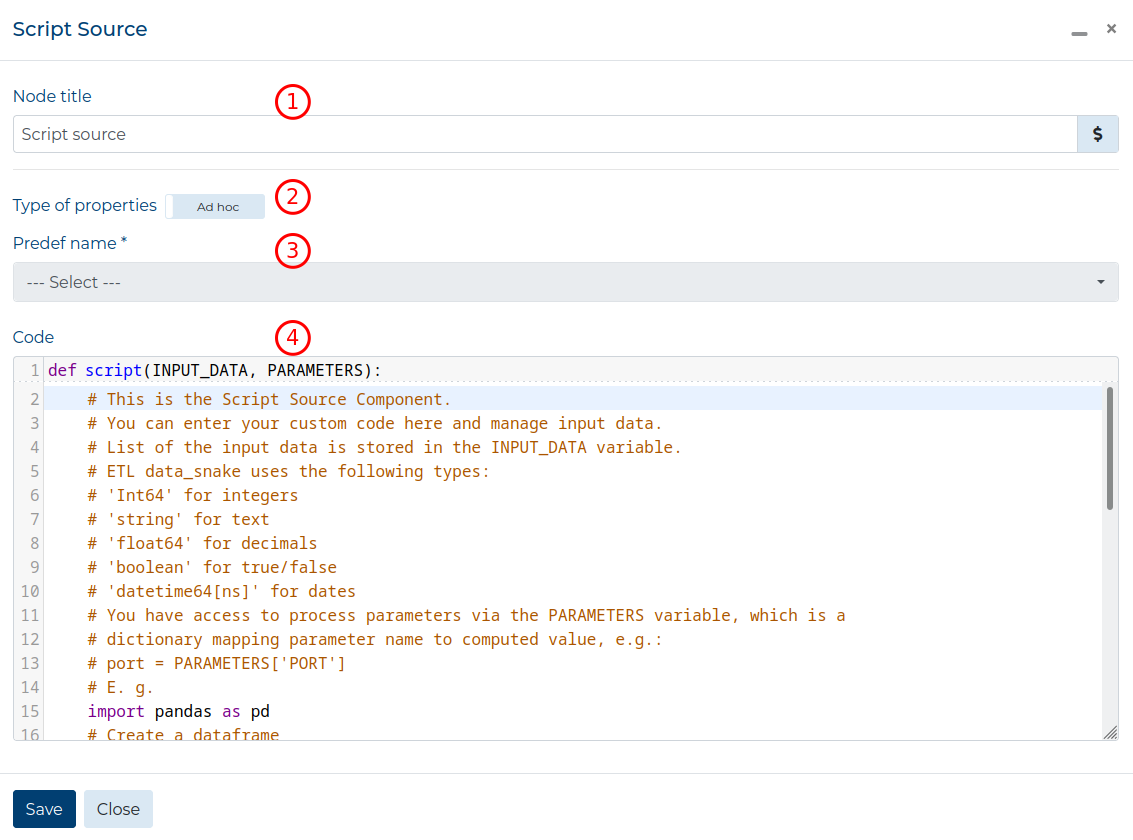

In the configuration window for the Script data source, you need to fill out the following fields:

- Node title - provide the title of this Node that will be displayed on the Workspace and in the Data Preview window (Optional),

- Type of properties - select whether to use one of the Predefined Sources or create an ad hoc source connection,

- Predef name - the name of the Predefined Source; after

selecting one, all the predefined fields will be filled out automatically (Required when

Predefinedis selected), - Script code - the code to use for extracting data; it must return a

Python Pandas DataFrame (Required when

Ad hocis selected).

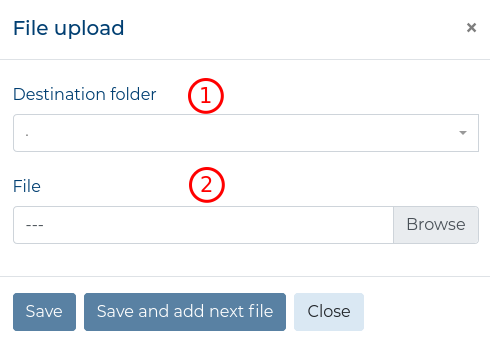

File Based Sources #

The Sources below are based on files that are uploaded to the Resources

of ETL data_snake or files situated on any place on the Internet. If you want to use

local files, then you must upload them to the Resources folder. You can do

so in the File Source configuration when you select Adhoc and click the

button. This will open a new window where you must choose

a specific folder in the Resources directory (1) and choose a file to

load there by clicking the Browse button (2).

button. This will open a new window where you must choose

a specific folder in the Resources directory (1) and choose a file to

load there by clicking the Browse button (2).

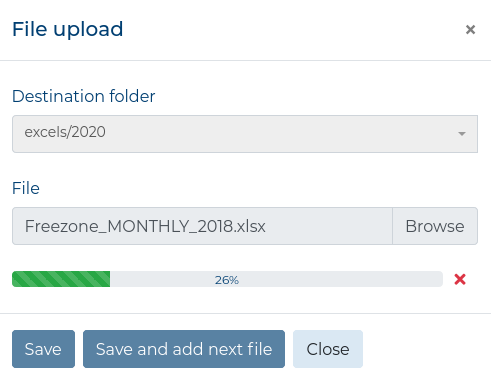

This will open a file upload popup, where you must choose one file from your local files.

When you choose a file, the upload process will start. You can cancel the process by clicking

the ![]() icon.

icon.

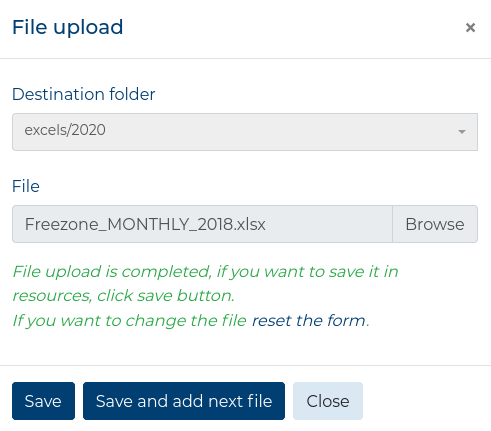

Upon completion, you will see a message informing you that you can Save the file

or reset the form to cancel the upload and choose another file. You can click the

Save and add next file button to save the file and reset the form to upload

another file. Clicking the Save button will close the modal. Clicking any

of the SAVE buttons will fill the Path field with the newly uploaded file.

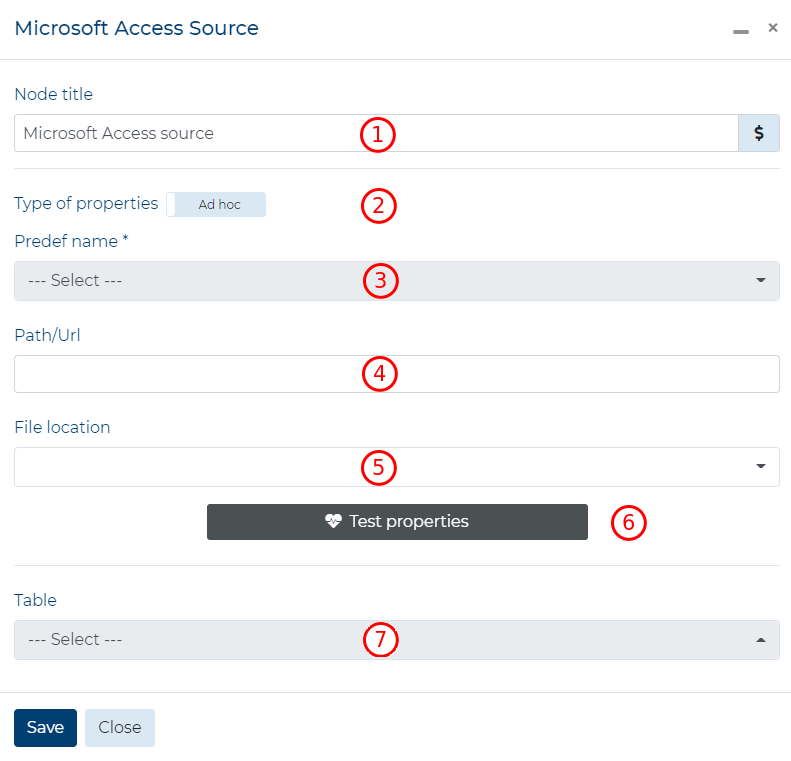

Microsoft Access Source #

In the configuration window for the Microsoft Access data source, you need to fill out the following fields:

- Node title - the title of this Node that will be displayed on the Workspace and in the Data Preview window (Optional),

- Type of properties - select whether to use one of the Predefined Sources or create an ad hoc source connection,

- Predef name the name of the Predefined Source; after

selecting one, all the grey fields will be filled out automatically (Required when

Predefinedis selected), - Path/URL - the filepath in the Resources folder or URL address

of the file (Required when

Ad hocis selected), - File location - whether the file is on the computer or on the

internet (Required when

Ad hocis selected), - Test properties / Edit properties (only visible when

Ad hocis selected) - button to check if the input fields point to a valid file. If the file is valid, changes to Edit properties which allows changing the above fields, - Table - choose the name of the table (toggled to Table)

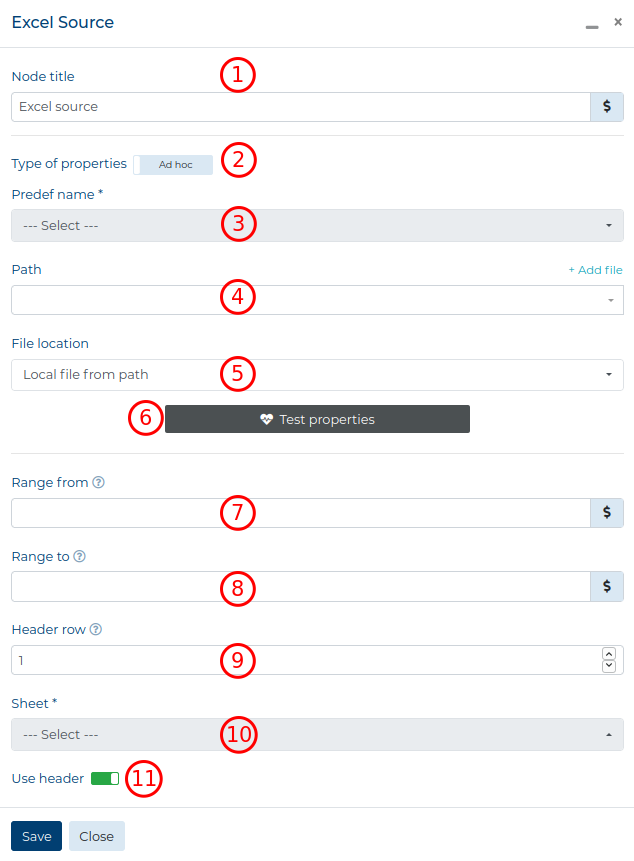

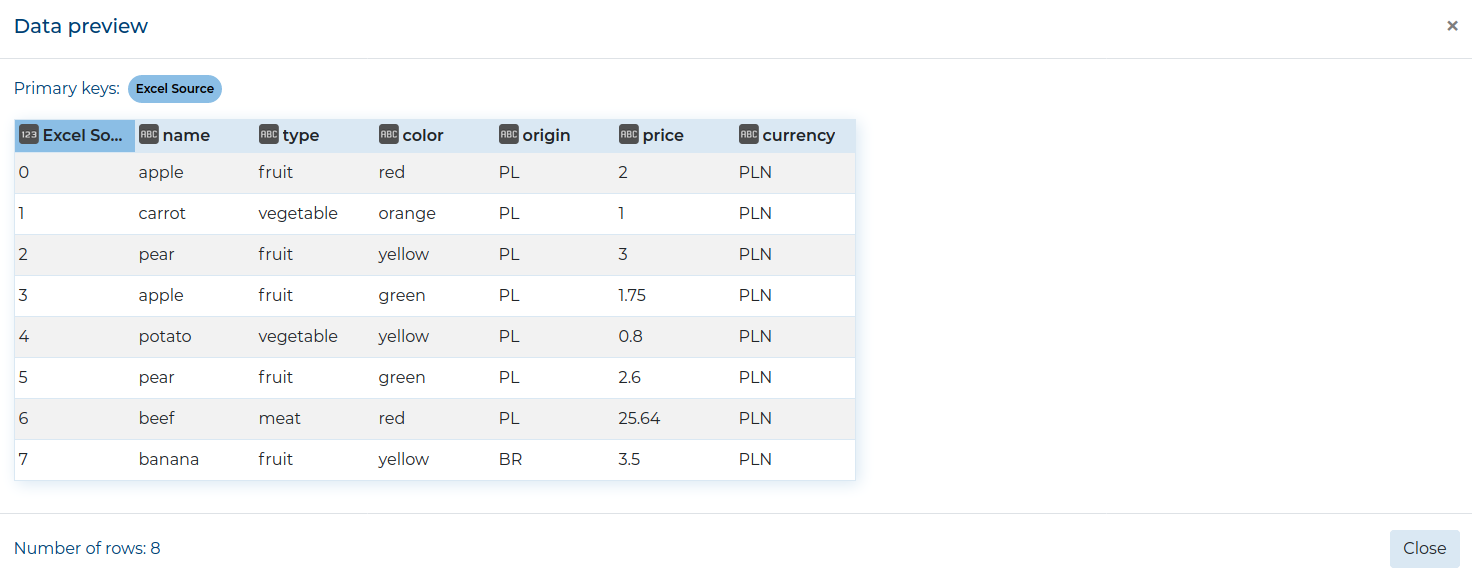

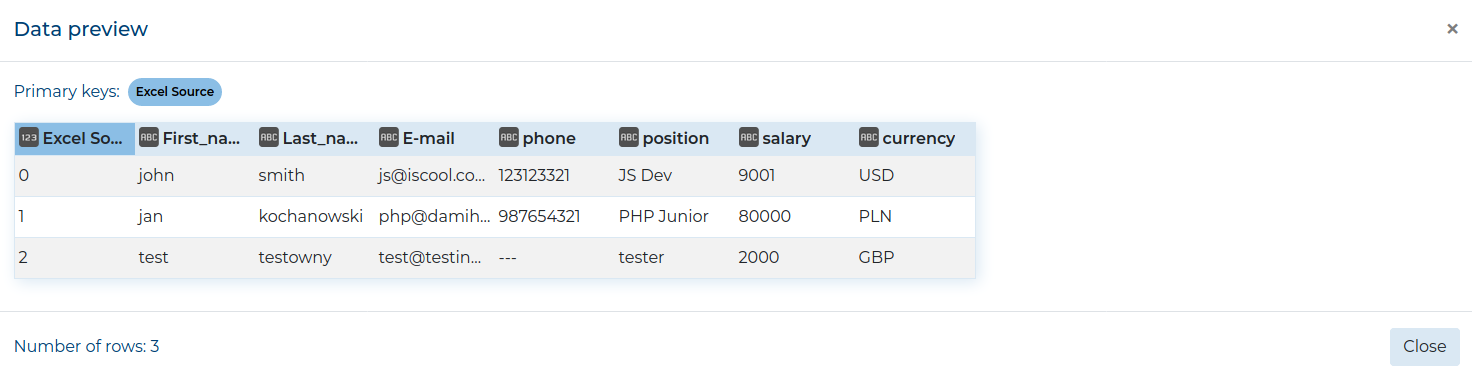

Excel Data Source #

Example usage of this source is in the Excel examples section.

In the configuration window for the Excel data source, you need to fill out the following fields:

- Node title - the title of this Node that will be displayed on the Workspace and in the Data Preview window (Optional),

- Type of properties - select whether to use one of the Predefined Sources or create an ad hoc source connection,

- Predef name - the name of the Predefined Source; after

selecting one, all the grey fields will be filled out automatically (Required when

Predefinedis selected), - Path/URL - the filepath in the Resources folder or URL address

of the file (Required when

Ad hocis selected), - File location - whether the Excel file is on the computer or on the

internet (Required when

Ad hocis selected), - Test properties / Edit properties (only visible when

Ad hocis selected) - button to check if the input fields point to a valid file. If the file is valid, changes to Edit properties which allows changing the above fields, - Range from - the cell from which to start extracting data from the Excel sheet (e.g.

if there is data in the Excel sheet from cell

A1to cellG20, then filling out this field withB3will extract the data from the Excel sheet from cellB3to cellG20); only use capital letters and integers for cell address; using lower case letters (e.g.e5) will cause an error, - Range to - the cell where the data extraction from the Excel sheet should end (e.g.

if there is data in the Excel sheet from cell

A1to cellG20, then filling out this field withE10will extract the data from the Excel sheet from cellA1to cellE10); only use capital letters and integers for cell address; using lower case letters (e.g.e5) will cause an error, - Header row - which row of extracted data should be treated as column names; this value should be set relative to the entire file, not the value in Range from; if the value is greater than the row in the cell set in Range from, then extracting will begin from the header row; Must be set only if the Use header switch is toggled on and left empty otherwise, or it will cause an error.

- Sheet - the name of the sheet to select from the Excel file,

- Use header - toggle whether the row set in Header row should be used as column names; if toggled off, the Excel sheet will be parsed with column names generated automatically as integers.

All column types are set to string when extracting data using the Excel Source.

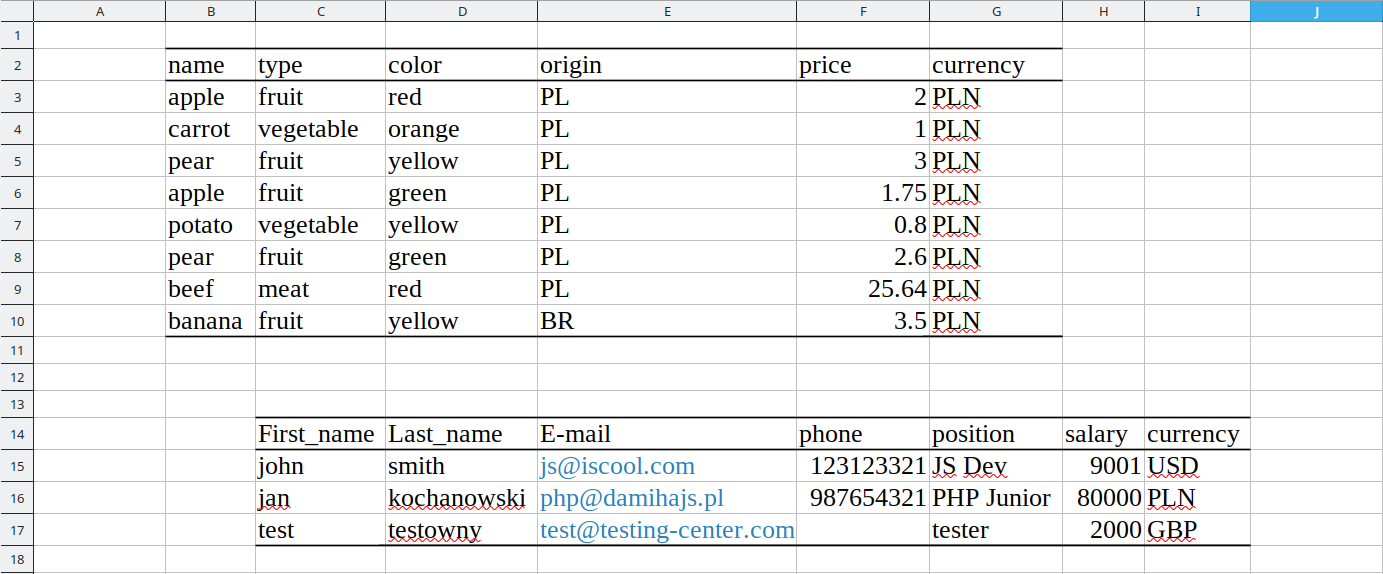

Excel examples #

To fully understand how the Excel Source works, please refer to these example configurations. All configuration will work on the following Excel file:

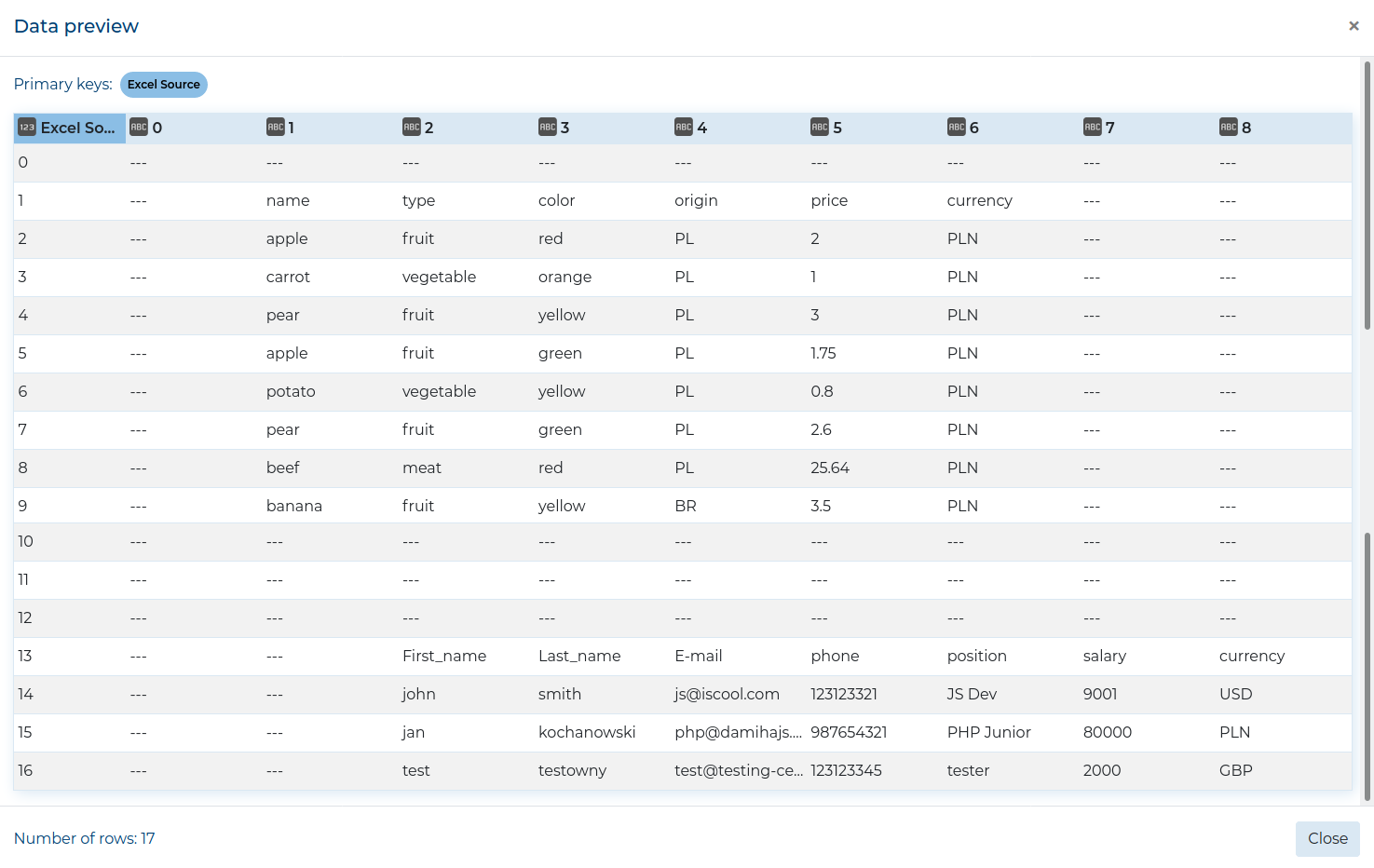

Example 1 #

- Range from - not set,

- Range to - not set,

- Header row - not set,

- Use header - toggled off,

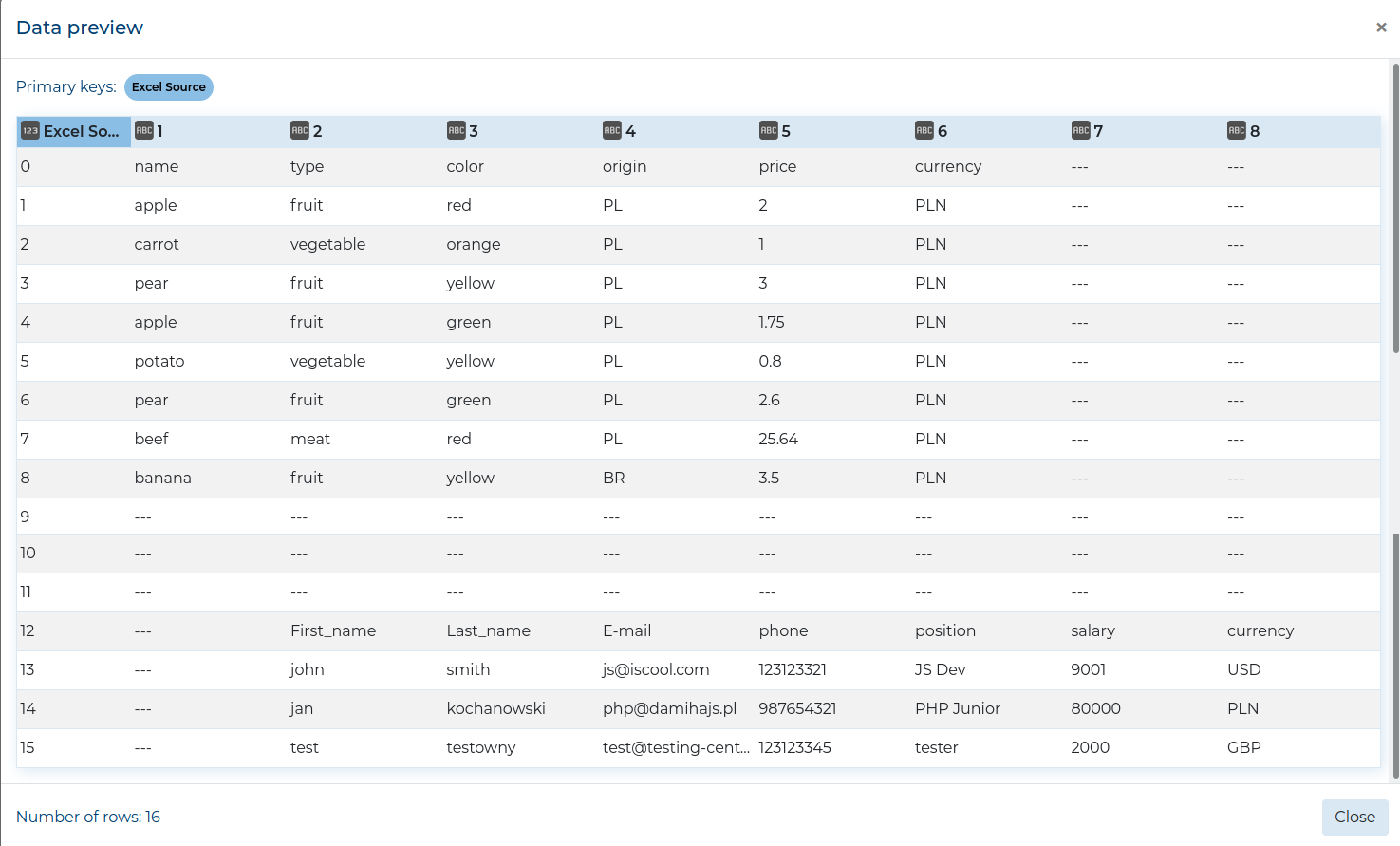

Extracted data:

ETL data_snake extracted data from A1 to the last cell that has any values (e.g. I17).

The column names are numbered from 0 to 8.

Example 2 #

- Range from -

B2, - Range to - not set,

- Header row - not set,

- Use header - toggled off,

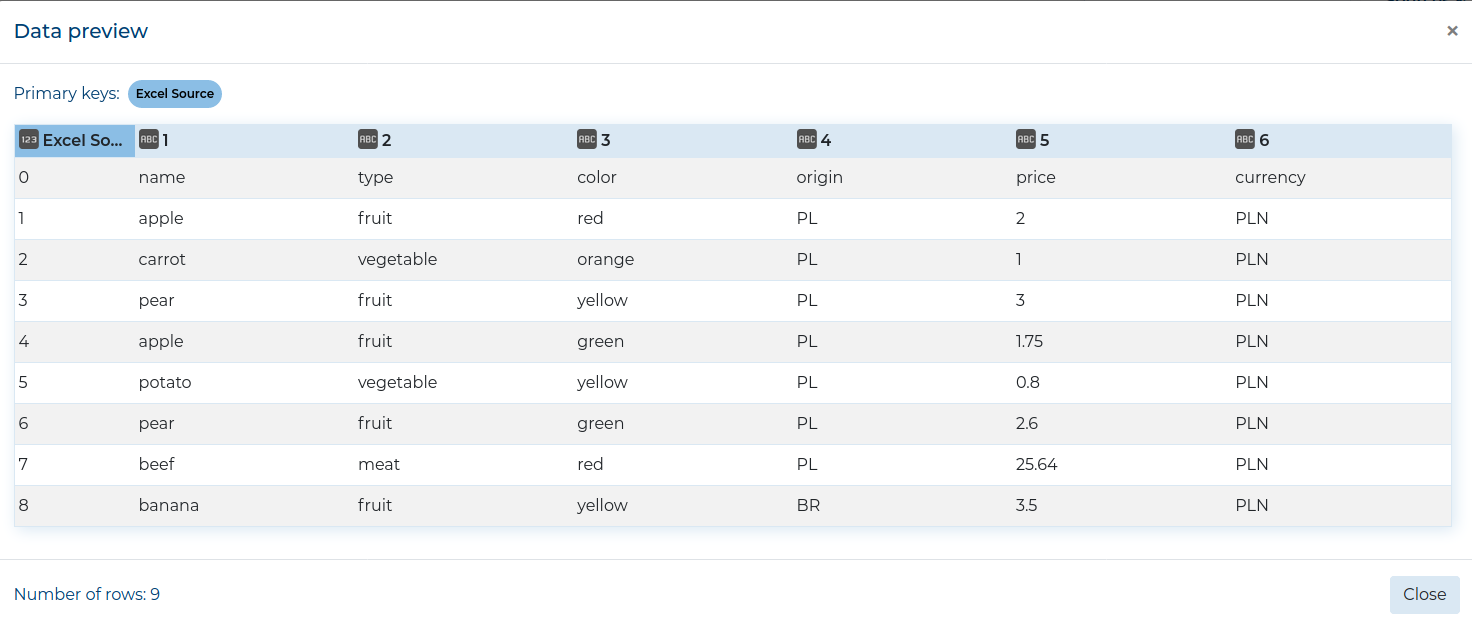

Extracted data:

ETL data_snake extracted data from B2 to the last cell that has any values (e.g. I17).

The column names are numbered from 0 to 8.

Example 3 #

- Range from -

B2, - Range to -

G10, - Header row - not set,

- Use header - toggled off,

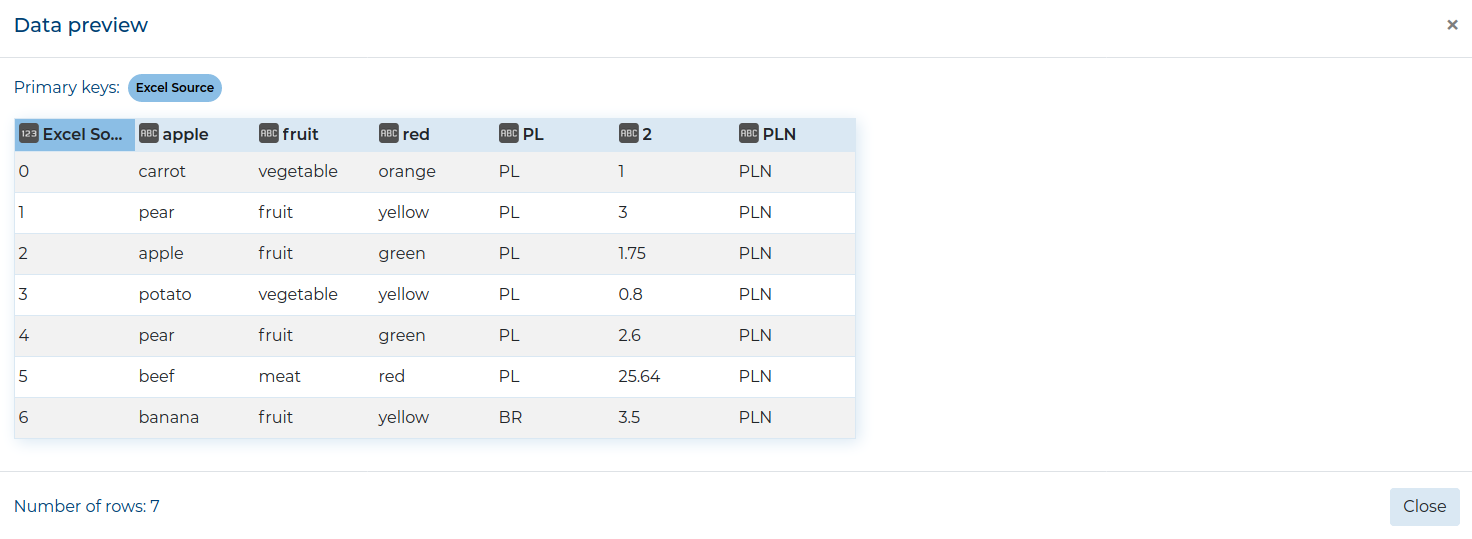

Extracted data:

ETL data_snake extracted data from B2 to G10 (only data from the first table).

The column names are numbered from 1 to 6 and the header row (from B2 to G2) is treated

as data.

Example 4 #

- Range from -

B2, - Range to -

G10, - Header row -

3, - Use header - toggled on,

Extracted data:

ETL data_snake extracted data from B3 to G10, because the Header row field was set to

3. The column names are values from B3 to G3.

Example 5 #

- Range from - not set,

- Range to -

I17, - Header row -

14, - Use header - toggled on,

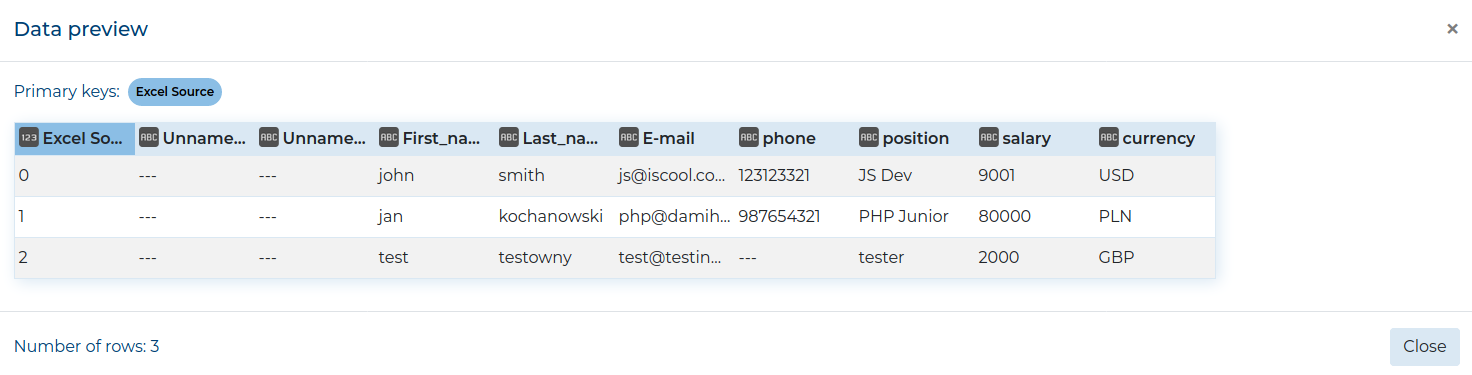

Extracted data:

ETL data_snake extracted data from A14 to I17, because the Header row field was set to

14. The column names are values from A14 to I14 (two first column names are Unnamed: 0 and

Unnamed: 1).

Correct Configuration Example 1 #

- Range from -

B2, - Range to -

G10, - Header row -

2, - Use header - toggled on,

Extracted data:

ETL data_snake extracted data from the first table in the Excel sheet and correctly named the columns.

Correct Configuration Example 2 #

- Range from -

C14, - Range to -

I17, - Header row -

14, - Use header - toggled on,

Extracted data:

ETL data_snake extracted data from the second table in the Excel sheet and correctly named the columns.

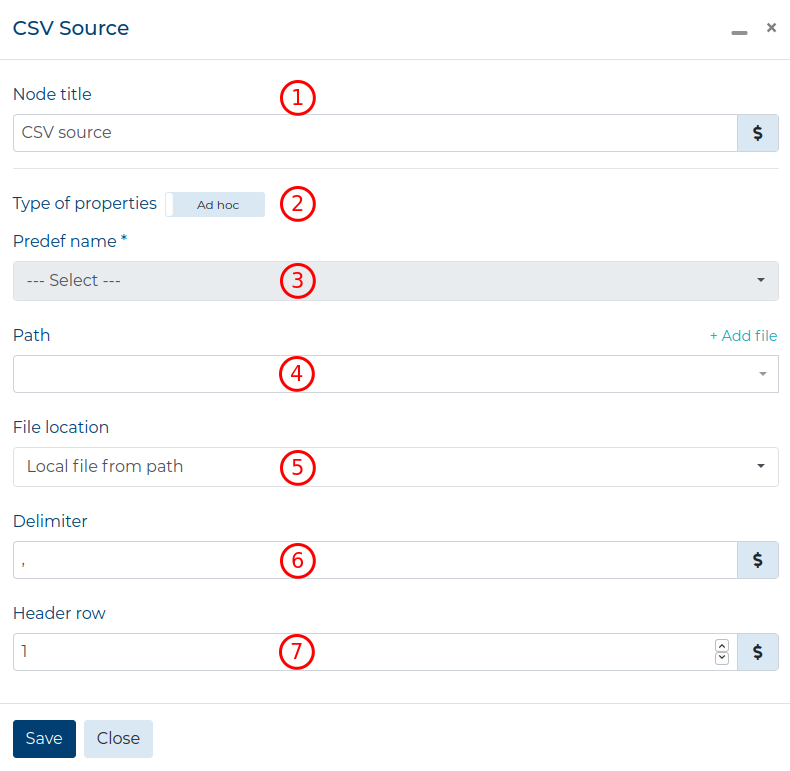

CSV Data Source #

In the configuration window for the CSV data source, you need to fill out the following fields:

- Node title - the title of this Node that will be displayed on the Workspace and in the Data Preview window (Optional),

- Type of properties - select whether to use one of the Predefined Sources or create an ad hoc source connection,

- Predef name - the name of the Predefined Source; after

selecting one, all the predefined fields will be filled out automatically (Required when

Predefinedis selected), - Path/URL - the filepath in the Resources folder or URL address

of the file (Required when

Ad hocis selected), - File location - whether the Excel file is on the computer or on the

internet (Required when

Ad hocis selected), - Delimiter - symbol used to divide the values in the CSV file (Required when

Ad hocis selected), - Header row - the line where the headers are located (Required when

Ad hocis selected).

All column types are set to string when extracting data using the CSV Source.

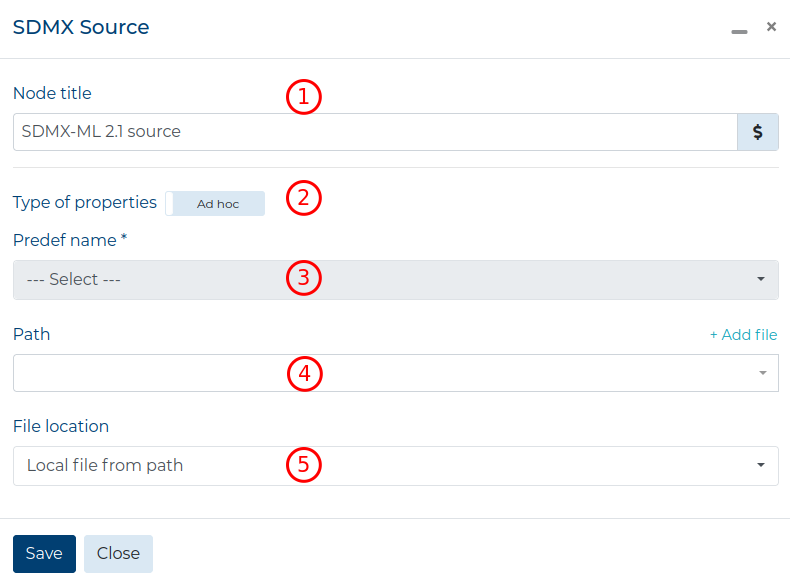

SDMX-ML Data Source #

In the configuration window for the SDMX-ML data source, you need to fill out the following fields:

- Node title - the title of this Node that will be displayed on the Workspace and in the Data Preview window (Optional),

- Type of properties - select whether to use one of the Predefined Sources or create an ad hoc source connection,

- Predef name - the name of the Predefined Source; after

selecting one, all the predefined fields will be filled out automatically (Required when

Predefinedis selected), - Path/URL - the filepath in the Resources folder or URL address

of the file (Required when

Ad hocis selected), - File location - whether the SDMX-ML file is on the computer or on the

internet (Required when

Ad hocis selected).

All column types are set to string when extracting data using the SDMX-ML Source.